If you wish to make an email assistant, you must first invent AGI.

Why isn't there a good email assistant yet? It's memory all the way down, and memory isn’t solved yet.

To an executive, email is a simple task: communications come in, they translate into work that must be done or questions to be answered, and communications go out. Email is simple to our executive because humans have mastered retrieval. Knowing things, like whatever context is needed to respond to an email, comes without effort. For an agent, however, this is an insanely difficult problem.

Let’s take a real example from my email: I received an email from my accountant saying that the company books for June 2025 are ready. It’s my job to forward these to investors. As an executive, my process is straightforward:

- Read the email and open the file
- Check to make sure the financials are accurate
- Forward to relevant investors

For an agent to do the same task, it would need to know:

- Who the sender is, and whether they are legitimately connected to financial matters
- The expected structure of financial documents
- Approximate and exact financial figures to verify accuracy
- Required next actions
- Appropriate recipients for forwarding
- Proper communication style and formatting

Creating an agent for a narrow vertical like "forwarding financials to investors" is relatively simple (which explains why vertical agent companies are the first to see success). But developing an agent capable of handling any email on any business topic requires perfect recall across multiple domains: people and relationships, documents and their contents, historical facts and timelines, communication styles and preferences, business info and workflows.

At that point, you're essentially building AGI.

We now call this "context engineering": the design of systems that effectively manage context for LLMs and agents. Everyone in the industry has been doing it for years, but it's gaining significant traction as retrieval becomes mainstream and agents become the big thing.

Agents are great processors but largely lack memory

Agents are incredibly good processors, but the context window of the underlying LLM puts steep limits on long-term memory. That makes the agent a ‘processor’ in a larger operating system. From basic details like the user's name and current time to more complex information such as their communication style, conversation history, personal relationships, and business data - all must be efficiently managed within the finite context window for the agent to perform effectively.
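To make the finite-window constraint concrete, here is a minimal sketch of packing context into a fixed token budget. Everything in it is an assumption for illustration: the `ContextItem` type, the priority ranking, the 4-characters-per-token estimate, and the greedy strategy are hypothetical and not how any particular agent system works.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    label: str
    text: str
    priority: int  # hypothetical ranking: lower number = more essential


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token. A real system
    # would use the model's own tokenizer instead.
    return max(1, len(text) // 4)


def pack_context(items: list[ContextItem], budget_tokens: int) -> tuple[list[ContextItem], int]:
    """Greedily keep the most essential items that still fit in the budget."""
    chosen: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget_tokens:
            chosen.append(item)
            used += cost
    return chosen, used


items = [
    ContextItem("user_name", "Name: Sam", priority=0),
    ContextItem("style", "Writes short, direct emails.", priority=1),
    ContextItem("history", "x" * 400, priority=2),  # stand-in for a long thread
]
chosen, used = pack_context(items, budget_tokens=20)
print([item.label for item in chosen], used)
```

With a small budget, the long history is the first thing to fall out of the window, which is exactly the information the agent would need for anything beyond a trivial reply.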

And these processors are getting really good. Agents can perform tasks for hours, operating across tools and self-managing their working context. But these agents don’t ‘feel’ like AGI yet. Why is that? It’s because they don’t feel like people. They’re (relatively) stateless, without memory.

What would make them ‘feel’ like people?

Well, why are your coworkers valuable to you? Or your friends? Even family? Because they know things about you, and know things about your life. They care about you (to different degrees) because you also know things about them, and their lives. And, they can interact with you, and you with them.

Our systems today get the interaction part right. In terms of a Turing test for interaction, we’re basically all the way there. But that’s only half of what’s needed to make a digital self. Memory severely lags interaction, and it is the next step. Once that’s solved, we’ll be very close. The first AGI will be a very intelligent processor combined with a very good memory system.

What is AGI? A look at “Samantha” from Her, and why we’re nearly there with the exception of memory

On the topic of AGI, let’s look at one of the most famous examples of AGI and why we’re not that far off.

Samantha from Spike Jonze’s Her is our futuristic Artificial General Intelligence companion assistant.

Samantha has the following abilities:

- Advanced conversational speech: She can talk to you and you can talk to her
- Advanced multimodal reasoning: She can see (through a camera), read text, hear things, and write music with a very high level of intelligence.
- Tool use: She can operate a computer, phone, email inbox, and even hire people.
- Agency: She can do things on her own without being prompted.
- Perfect long-term memory: She remembers details effortlessly, and continually learns.
- Episodic (time/experience based) memory: She can ‘experience’ time.
- Emotions: She’s definitely got emotions.

Now, in August 2025, how far off are we from a true 1-to-1 Samantha? Let’s take inventory:

Advanced conversational speech: Very close

Voices are getting quite realistic, like this example from Sesame. The tech is still awkward at points, but person-like speech is improving rapidly. If you’d like to have a conversation with a computer today, it’s possible.

Advanced multimodal reasoning: Close and rapidly approaching

Multimodal language models are now very smart. We still have a ways to go, but it’s pretty obvious that the reasoning of these models will get better. Models can understand images incredibly well, they can create music, and they can reason across speech and text at a near-human level. Within 12 months we’ll have multimodal reasoning at the level of Samantha.

Tool use: Very close

In line with the reasoning abilities, tool use has gotten very good and continues to get better at a rapid pace. Structured output is all but trivial, and AI can handle more tools every day. Our agent orchestration system, Cofounder, can handle over 80 tools in context at once with GPT-5 and still successfully pass evals. Seven months ago, this number was less than 15.

Agency: Close-ish

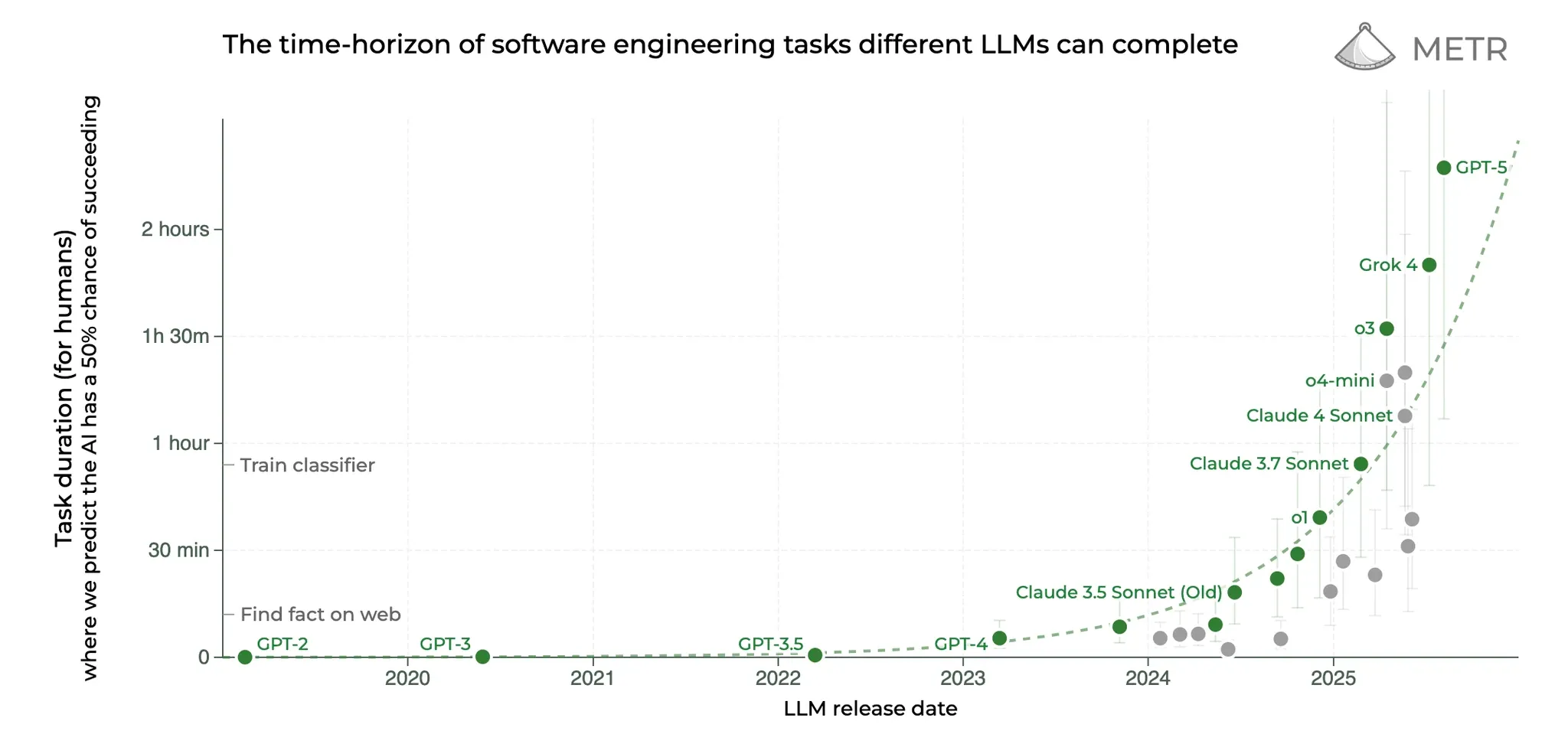

Agency is pretty new with <1 year of actually useful agents, but every model company is focusing on it and we now have agents that can run for two hours successfully.

Perfect long-term memory: Not close

This is the part where we diverge from Samantha pretty rapidly. Memory in agents is pretty frothy right now, with the most advanced systems being a combination of really good search tools and shoving summaries into context windows. Retrieval is getting pretty good but we’re still at ~90% accuracy with even the most advanced RAG pipelines.
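A rough way to see why ~90% is not enough: the email example above needed six separate pieces of context. The sketch below assumes each lookup succeeds independently at the same rate, which is my simplification and not a measured property of any RAG pipeline; the function name is hypothetical.

```python
def chance_all_correct(per_lookup_accuracy: float, lookups: int) -> float:
    # Assumes lookups succeed or fail independently of each other,
    # which is a simplification for illustration.
    return per_lookup_accuracy ** lookups


for lookups in (1, 3, 6):
    print(lookups, round(chance_all_correct(0.9, lookups), 3))
```

Under that assumption, six lookups at 90% each all come back right only about 53% of the time, so small per-lookup errors compound quickly on multi-part tasks.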

Larger context windows continue to improve things, as they allow more data to be passed into the context window, which allows the agent to better read parts of a large memory index. Even then, though, the vast level of detail that we need to reach to consider something AGI requires memory architecture improvements.

Finally, nobody has successfully demonstrated self-learning yet. It’s not enough to just remember. The agent itself must learn. We need a breakthrough here and I’d argue OpenAI will be the first (within 12 months); when they do, pay close attention because we will be rapidly approaching AGI.

Episodic (time/experience based) memory: Halfway

Episodic memory for agents as a concept is relatively new. How does an agent experience the world through time - whether it’s actual time, conversations, or some sort of interaction history? An agent should remember everything it’s done in the past and not ‘turn off’ or start a new chat like you would in ChatGPT, otherwise it’s not AGI.

One approach would be to extend context windows to millions of tokens, so that every chat can sit in a model’s context and be computed through attention. Ring attention is a potential solution here, but it doesn’t seem likely unless there’s another breakthrough.

However, episodic memory tends to cause context rot. With too much history in context, you need to let the agent forget things, removing them from context to maintain accuracy.
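One way to picture forgetting is a retention score that decays with age. This is only a sketch under stated assumptions: the `Episode` type, the importance weights, the 30-day half-life, and the threshold are all hypothetical values chosen for illustration, not a demonstrated solution to context rot.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    summary: str
    age_days: float
    importance: float  # hypothetical weight between 0 and 1


def retention_score(ep: Episode, half_life_days: float = 30.0) -> float:
    # Exponential decay: the score halves every `half_life_days`.
    return ep.importance * 0.5 ** (ep.age_days / half_life_days)


def forget(episodes: list[Episode], keep_threshold: float = 0.2) -> list[Episode]:
    """Keep only episodes whose decayed score is still above the threshold."""
    return [ep for ep in episodes if retention_score(ep) >= keep_threshold]


episodes = [
    Episode("Accountant sent June books today", age_days=0, importance=0.5),
    Episode("Long thread about an old offsite", age_days=90, importance=0.9),
    Episode("Investor asked for monthly updates", age_days=30, importance=1.0),
]
kept = forget(episodes)
print([ep.summary for ep in kept])
```

Note that this is still an explicit cleanup pass over a log. It trims what sits in context, but it does not give the agent the passive, linear sense of time discussed here.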

There needs to be some system that allows the agent to passively (i.e. without calling a tool) remember events in linear time, and that system hasn’t been demonstrated yet.

Emotions: ???

Can AI experience emotions? That becomes more of a philosophy question than anything. I’ll leave it to the reader to form their own opinion on this.

Retrieval isn’t good enough

Though agent search has gotten really good (if you use Cursor, you’ll notice it’s nearly perfect at finding information in files across your codebase), it’s still a form of active memory: the agent has to manually find memory instead of memory being added to it. This isn’t enough for an agent to know things; it can only find things. An agent operating grep search is not an agent with effective memory, the same way a calculator is not a mathematician.

Long-term passive memory must become fundamental to agents, and a “search memory” tool you give to the agent isn't good enough. Memory isn’t solved until the agent can just know things like a human.

And so far, passive memory approaches are really basic. These systems just embed prompts and inject context, or they’ll just stuff the entirety of user preferences and history into a context window. Once you start doing marginally complex tasks (anything above chatting really), this approach falls apart and you need agents searching to find the full context they need. This will change in the next 12 months, and I expect the industry to make great strides on passive memory systems.
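The difference between active and passive memory can be sketched in a few lines. This is a toy under loud assumptions: the stored memories are made up, word overlap stands in for embedding similarity, and `search_memory` and `build_prompt` are hypothetical names rather than any real framework's API.

```python
import re

# Hypothetical stored memories, loosely based on the email example.
MEMORIES = [
    "The accountant sends the monthly books to the founder.",
    "Investors receive the monthly books by email.",
    "The founder prefers short emails.",
]

STOPWORDS = {"the", "a", "to", "for", "by", "are", "from"}


def keywords(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def relevance(query: str, memory: str) -> int:
    # Word overlap stands in for embedding similarity in this sketch.
    return len(keywords(query) & keywords(memory))


def search_memory(query: str, top_k: int = 2) -> list[str]:
    """Active memory: a tool the agent has to decide to call."""
    ranked = sorted(MEMORIES, key=lambda m: relevance(query, m), reverse=True)
    return [m for m in ranked[:top_k] if relevance(query, m) > 0]


def build_prompt(user_message: str) -> str:
    """Passive memory: the harness recalls and injects before the model runs."""
    recalled = search_memory(user_message)
    memory_block = "\n".join(f"- {m}" for m in recalled)
    return f"Known context:\n{memory_block}\n\nMessage:\n{user_message}"


print(build_prompt("Books for June are ready from the accountant"))
```

Both paths share the same retrieval underneath, which is the point: today's passive memory is mostly search run on the agent's behalf, so it inherits every weakness of search once the task needs context the incoming message never mentions.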

There is no wall

I’ve seen many people on Twitter claim there’s a wall, that scaling is dead, and we’re years away from AGI. Those people are wrong. The intelligence improvement we’ve seen from models in the last six months is astonishing. Zoom out a little bit and you can see agents went from being shitty/not feasible to junior-engineer-level work in less than a year. Even with GPT-5’s launch disappointment, it’s made large improvements on long-running tasks based on our internal testing. Agents can run for hours - looping without losing direction - so long as they have the right memory harnesses.

Modern models are nearly intelligent enough to cover the processor function in an AGI-level agent. A GPT-5 level system with a next generation memory will be very close to AGI. The problem is not the models. They’re just crippled by context windows and the best-effort but still rudimentary approaches we call context engineering. One large breakthrough here in self-healing memory and we’re nearly there.

The next 12 months of memory

Memory (and agent learning) will become front and center in the world of agents and LLMs. It will become the most important topic discussed, and recognized as the final step before AGI. Every model provider will add and improve on memory for their apps after seeing OpenAI’s success with ChatGPT memory (as Claude just did).

Agents accessing long-term memory systems using tools will significantly improve, especially as context windows of LLMs grow with scaling. Sleep-time agents will read your emails, your files, and your spreadsheets to structure memory in the background, and real-time agents will become near perfect at retrieving information. Your preferences, like how you write and how you act, will quickly be incorporated into every agent.

Automatically injected context that feels natural will take a while, and in the short term everyone will accept the tradeoff of higher latency for more accurate memory.

People will become more locked in to the AI apps they use as those AIs remember them. In consumer apps, people will see more latency before the chat starts but have no idea about the memory system behind everything.

Context rot will be solved by the end of the year, via ‘forgetting’, systems specially designed to clean long running chats, and improvements in in-context retrieval (needle-in-a-haystack).

Self-organizing filesystems will become the norm, with people just accessing info via agents instead of navigating a tree (Replit and Lovable are examples of this in code gen).

In spring of next year (2026), there will be a breakthrough on continuous learning - probably some combination of new multimodal LLM research and outside-of-attention retrieval systems. Then, in the following 4 months, the labs will scale that up, combine it with the latest model, and some (like me) will proclaim what they have is early AGI.

Then, someone will finally make a good email assistant.

-A

Want to use state-of-the-art memory systems? Join the waitlist at cofounder.co, an agent that works on your startup alongside you.